Smarter Rails apps with AI: how to use LLMs in real life

Guillaume Briday

Lyon.rb - June 17, 2025

The plan for today

- Why bother?

- Concepts behind LLMs, RAG and tools

- How to implement it with RubyLLM

- Deploy your own LLM with Kamal

Why bother?

- It's not about writing more or better code

- It's about adding real value to users

- It's about enhancing your existing Rails application with AI

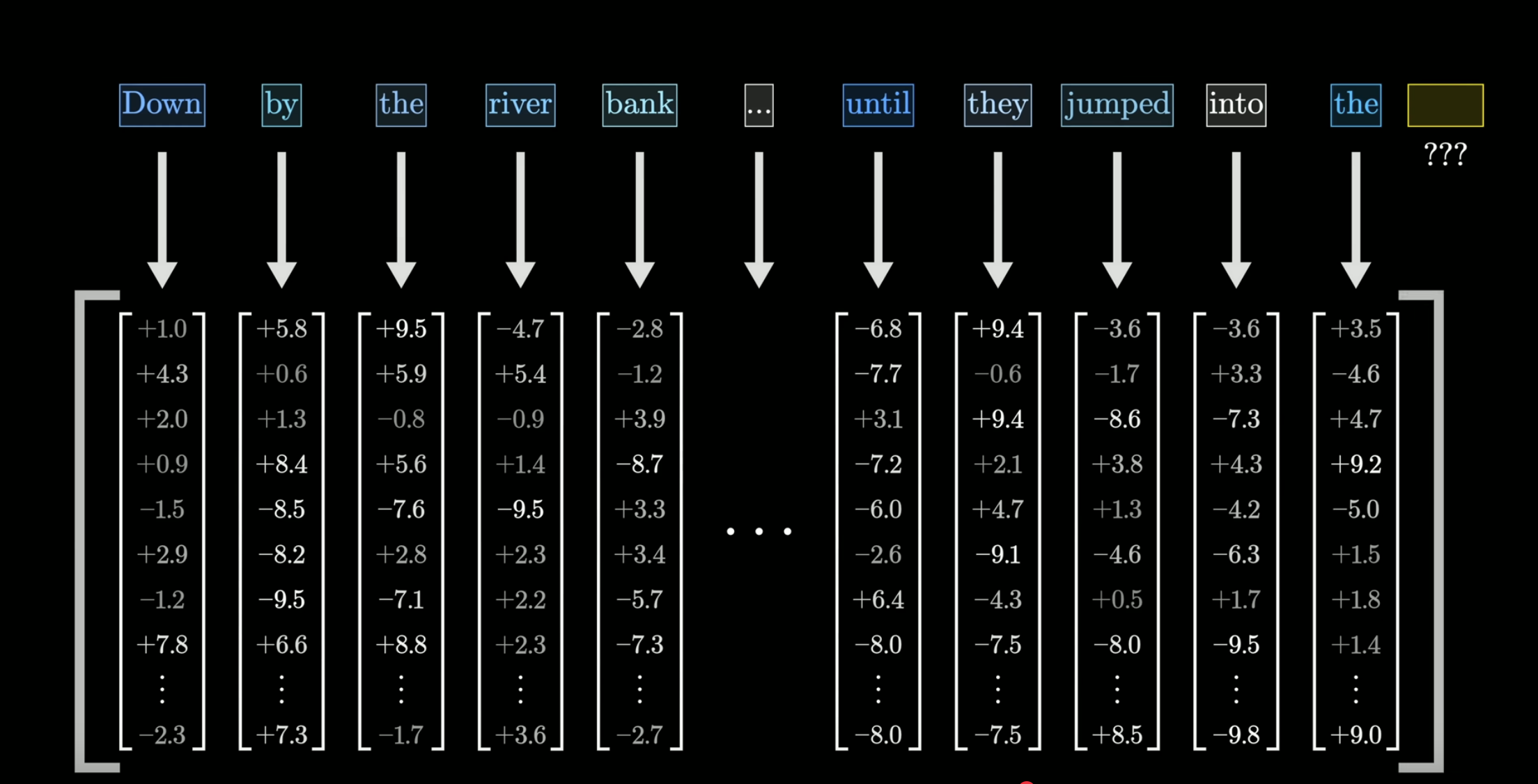

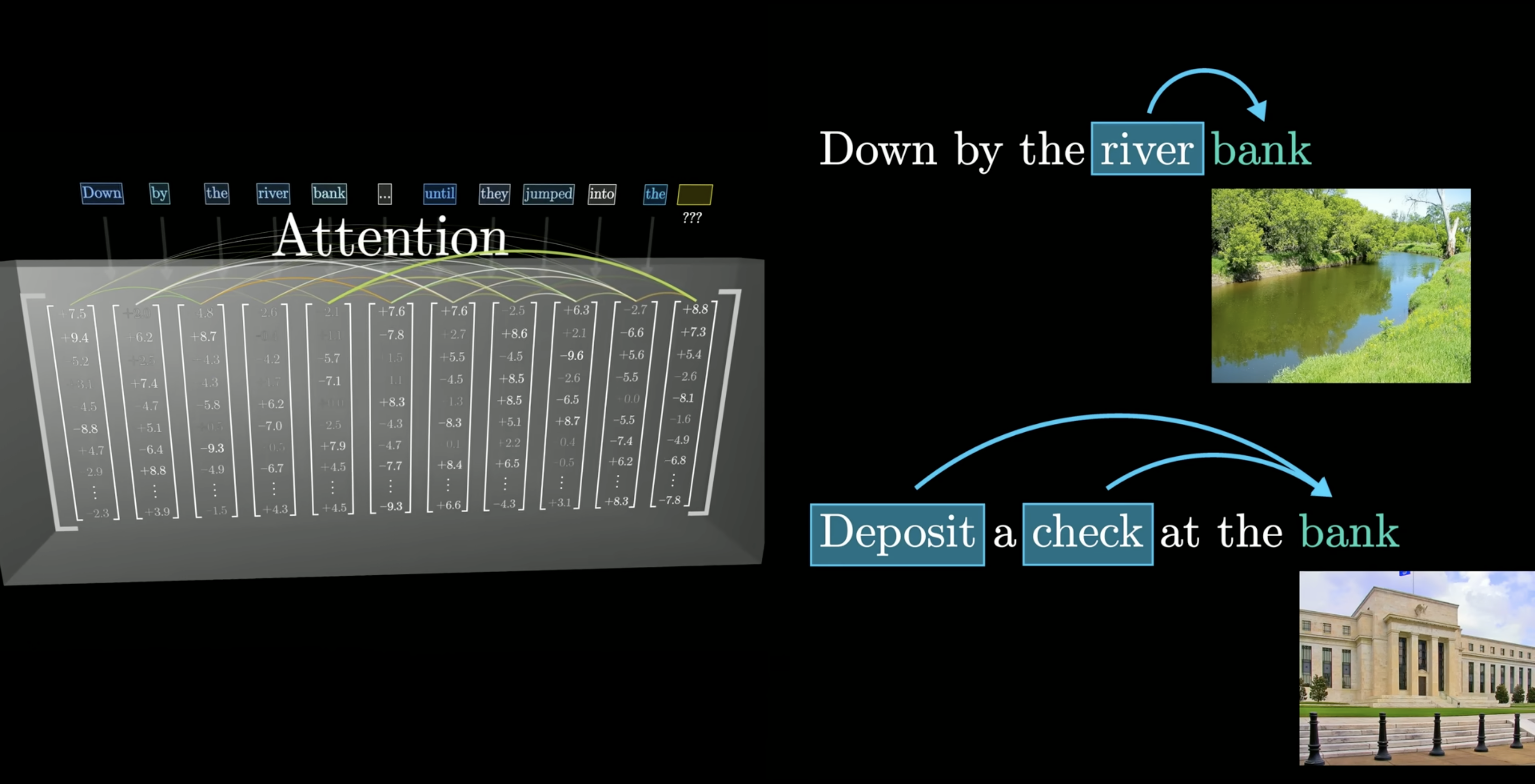

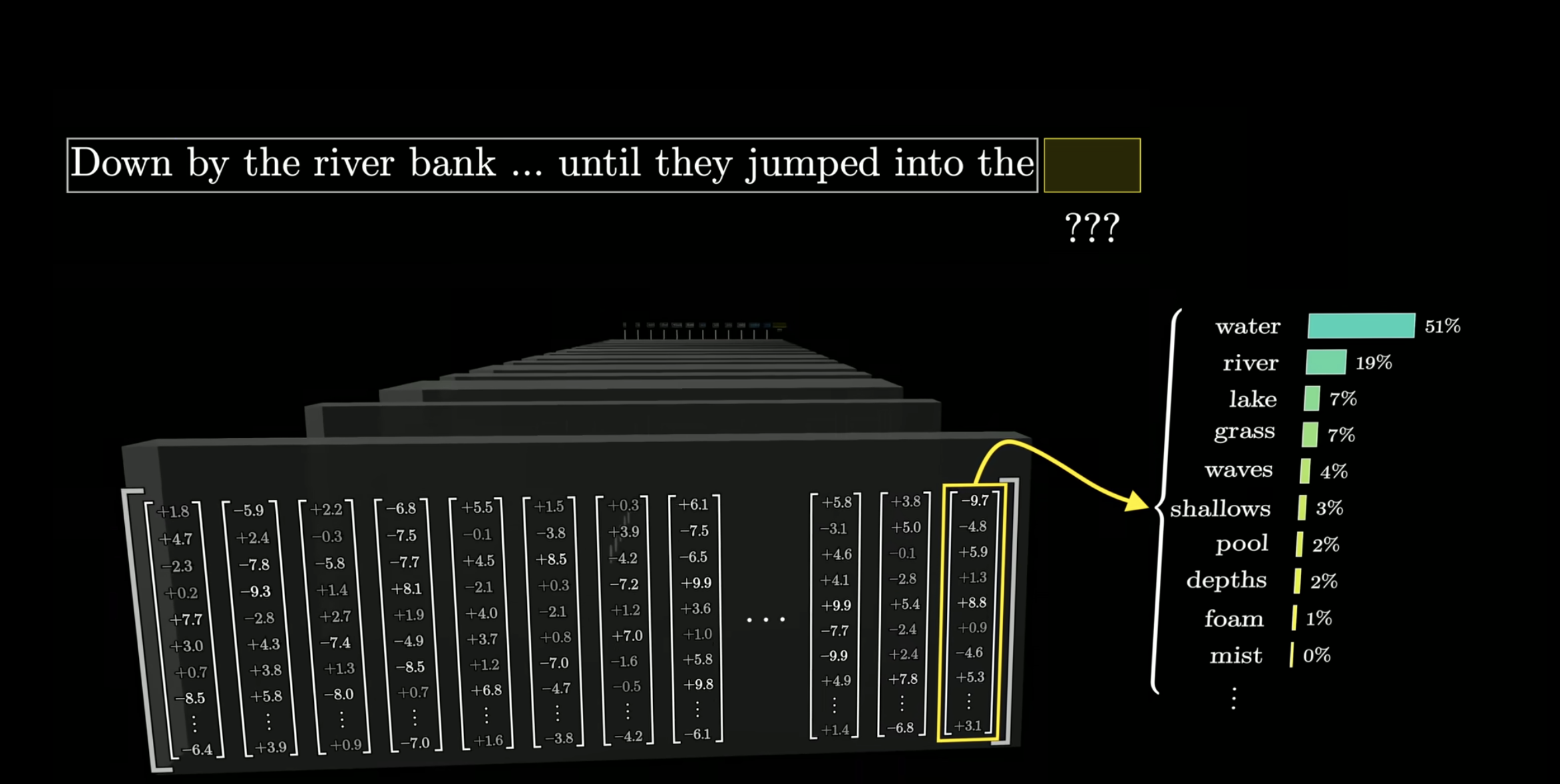

How do LLMs work?

"Large Language Models explained briefly", a video by 3Blue1Brown


GPTs are not intelligent: they are probabilistic, predicting each next token from a probability distribution.

But they are really good at understanding context.
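To make "probabilistic" concrete: a model scores every candidate next token, turns those scores into probabilities, and samples one. The toy Ruby sketch below shows only that last step, with an invented three-word vocabulary and invented scores (real models do this over tens of thousands of tokens). The `temperature` parameter illustrates one way behavior can be guided: lower values make the output more deterministic.

```ruby
# Toy next-token sampler. The vocabulary and scores are invented for
# illustration; a real model produces one score (logit) per token.

# Temperature-scaled softmax: turns scores into probabilities that sum to 1.
def softmax(logits, temperature: 1.0)
  scaled = logits.map { |logit| logit / temperature }
  max = scaled.max # subtracting the max avoids overflow in Math.exp
  exps = scaled.map { |value| Math.exp(value - max) }
  total = exps.sum
  exps.map { |value| value / total }
end

# Draws one token at random, weighted by its probability.
def sample_next_token(vocabulary, logits, temperature: 1.0, rng: Random.new)
  threshold = rng.rand
  cumulative = 0.0
  vocabulary.zip(softmax(logits, temperature: temperature)).each do |token, probability|
    cumulative += probability
    return token if threshold < cumulative
  end
  vocabulary.last # only reached through floating-point rounding
end

vocabulary = %w[Rails Ruby Python]
logits = [2.0, 1.0, 0.1]

p softmax(logits, temperature: 1.0).map { |prob| prob.round(2) }
p softmax(logits, temperature: 0.2).map { |prob| prob.round(2) }
puts sample_next_token(vocabulary, logits, rng: Random.new(42))
```

With `temperature: 0.2`, almost all of the probability mass lands on "Rails", so repeated runs keep producing the same word; at 1.0 the other words are still picked about a third of the time.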

Introducing Retrieval-Augmented Generation (RAG)

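Retrieval-Augmented Generation improves a language model's answers by retrieving relevant information from external sources, such as your own documents, and adding it to the prompt before the model generates its response.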

How to build your RAG pipeline

- Store embeddings

- Search relevant information

- Augment the prompt with context

- Generate the answer
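The four steps can be sketched in plain Ruby without any gem. This is a toy under stated assumptions: the "embedding" is a bag-of-words count vector over a tiny hand-picked vocabulary, standing in for a real embedding model, and the documents are made-up samples. In the Rails version that follows, `RubyLLM.embed` produces the embeddings and pgvector (through the neighbor gem) runs the search inside PostgreSQL.

```ruby
# Toy embedding: counts of a few hand-picked words (an assumption for
# illustration; real embeddings come from a model and have ~1536 dimensions).
VOCABULARY = %w[remote work policy office vacation days salary].freeze

def embed(text)
  words = text.downcase.scan(/[a-z]+/)
  VOCABULARY.map { |term| words.count(term).to_f }
end

# Cosine similarity: 1.0 for vectors pointing the same way, 0.0 for unrelated ones.
def cosine_similarity(a, b)
  dot = a.zip(b).sum { |x, y| x * y }
  norm = Math.sqrt(a.sum { |x| x * x }) * Math.sqrt(b.sum { |x| x * x })
  norm.zero? ? 0.0 : dot / norm
end

# 1. Store embeddings (an in-memory array instead of a database table)
documents = [
  "Remote work policy: employees may work from home two days per week.",
  "Vacation policy: employees request vacation days in advance.",
  "Office access: the office opens at 8am."
].map { |content| { content: content, embedding: embed(content) } }

# 2. Search relevant information: the k most similar documents
def search(documents, query, k: 2)
  query_embedding = embed(query)
  documents.max_by(k) { |doc| cosine_similarity(doc[:embedding], query_embedding) }
end

# 3. Augment the prompt with the retrieved context
def build_prompt(query, hits)
  context = hits.map { |doc| doc[:content] }.join("\n---\n")
  "Context:\n#{context}\n\nQuestion: #{query}"
end

query = "What is the remote work policy?"
prompt = build_prompt(query, search(documents, query, k: 1))
puts prompt

# 4. Generate the answer: `prompt` would now be sent to an LLM (omitted here).
```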

Introducing RubyLLM

Adding the gems

Gemfile

    gem "ruby_llm"
    gem "pgvector"
    gem "neighbor"
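RubyLLM also needs credentials for at least one provider before `RubyLLM.embed` or `RubyLLM.chat` can be called. A minimal initializer, assuming OpenAI is the provider and that the key lives in an `OPENAI_API_KEY` environment variable (the variable name is an assumption, not something the talk specifies):

```ruby
# config/initializers/ruby_llm.rb
# Assumes OpenAI as the provider; the key is read from the environment
RubyLLM.configure do |config|
  config.openai_api_key = ENV["OPENAI_API_KEY"]
end
```

The `limit: 1536` in the migration below assumes an embedding model that returns 1536-dimensional vectors, such as OpenAI's `text-embedding-3-small`; pgvector rejects vectors of any other size for that column.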

Enable the pgvector extension

db/migrate/create_documents.rb

    class CreateDocuments < ActiveRecord::Migration[8.0]
      def change
        enable_extension "vector"

        create_table :documents do |t|
          t.text :content
          # Must match the number of dimensions returned by the embedding model
          t.vector :embedding, limit: 1536
          t.timestamps
        end
      end
    end

Create the Document model

app/models/document.rb

    class Document < ApplicationRecord
      has_neighbors :embedding

      # Only re-embed when the text changed, to avoid an API call on every save
      before_save :generate_embedding, if: :content_changed?

      # Embeds the question, then returns the 5 closest documents by cosine distance
      scope :search_by_similarity, ->(query_text) {
        query_embedding = RubyLLM.embed(query_text).vectors
        nearest_neighbors(:embedding, query_embedding, distance: "cosine").limit(5)
      }

      private

      def generate_embedding
        return if content.blank?

        self.embedding = RubyLLM.embed(content).vectors
      rescue RubyLLM::Error => e
        # ...
      end
    end

Importing documents

rails console

    Document.create(content: "Company HR policy: Employees must...")
    Document.create(content: "Company internal documentation: ...")

    documents = Document.search_by_similarity("What is the company's remote work policy?")
    documents.each { |document| puts "- #{document.content}" }

Using our RAG

app/controllers/chat_controller.rb

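    class ChatController < ApplicationController
      def ask
        # Retrieve the most relevant documents and join them into one context string
        documents = Document.search_by_similarity(params[:query])
        context = documents.pluck(:content).join("\n---\n")

        # Augment the prompt with that context, then generate the answer
        chat = RubyLLM.chat
        chat.with_instructions("You're an assistant answering questions using company documents.")
        response = chat.ask("Context:\n#{context}\n\nQuestion: #{params[:query]}")

        render plain: response.content
      end
    end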

Real world example

app/controllers/projects_controller.rb

    class ProjectsController < ApplicationController
      def show
        chat = RubyLLM.chat
        chat.with_instructions("...")
        chat.with_instructions(
          "Here is the information of the project as JSON: #{@project.to_json}."
        )

        # Passing a block streams the answer: each chunk is appended to the page as it arrives
        chat.ask "Summarize the data in a few lines to understand the basic details of this project." do |chunk|
          Turbo::StreamsChannel.broadcast_append_to(
            @project,
            target: dom_id(@project, "summary"),
            content: chunk.content
          )
        end
      end
    end
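Passing a block to `ask`, as in the controller above, streams the answer: the block runs once per chunk, so the summary appears progressively instead of only once the whole response is ready. A dependency-free sketch of that contract, using a hypothetical `FakeStreamingChat` (not part of RubyLLM) and a plain string standing in for the Turbo Stream target:

```ruby
# Minimal stand-in for a streaming chat client (hypothetical, not RubyLLM):
# `ask` yields each chunk to the block as it "arrives", then returns the
# complete message.
Chunk = Struct.new(:content)

class FakeStreamingChat
  def initialize(pieces)
    @pieces = pieces # pre-baked chunks instead of a real model call
  end

  def ask(_prompt)
    @pieces.each { |piece| yield Chunk.new(piece) if block_given? }
    Chunk.new(@pieces.join)
  end
end

summary_target = +"" # plays the role of the DOM element receiving appends

chat = FakeStreamingChat.new(["The project ", "is a Rails ", "app."])
final = chat.ask("Summarize the project") do |chunk|
  summary_target << chunk.content # like one broadcast_append_to per chunk
end

puts summary_target
```

Because every chunk is appended in order, the target ends up holding exactly the full text that a non-streaming call would have returned.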

Real world example

app/models/project.rb

    class Project < ApplicationRecord
      before_save :generate_description

      private

      # Fills in a description with an LLM-written summary when none was provided
      def generate_description
        return if description.present?

        chat = RubyLLM.chat
        chat.with_instructions("...")
        # Inside the model, the record is `self`, so `to_json` is called directly
        chat.with_instructions(
          "Here is the information of the project as JSON: #{to_json}."
        )

        response = chat.ask("Summarize the data in a few lines to understand the basic details of this project")
        self.description = response.content
      end
    end

Introducing Tools

app/tools/create_project_tool.rb

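    class CreateProjectTool < RubyLLM::Tool
      description "Create a project"

      param :description,
            desc: "Project description",
            required: true

      # Built per request, so the tool only creates projects for this user
      def initialize(user)
        @user = user
      end

      # Called by RubyLLM when the model decides to use this tool
      def execute(description:)
        @user.projects.create(description: description)
      end
    end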
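The model never runs code itself: it replies with the name of a tool plus JSON arguments, the library calls the matching Ruby object, and the result is sent back to the model. A simplified, dependency-free sketch of that dispatch step; `ToyCreateProjectTool`, `dispatch_tool_call` and the JSON shape of the tool call are all hypothetical, and this is not RubyLLM's actual implementation:

```ruby
require "json"

# Hypothetical tool mirroring CreateProjectTool, without ActiveRecord.
class ToyCreateProjectTool
  attr_reader :created

  def initialize
    @created = [] # stands in for user.projects
  end

  def name
    "create_project"
  end

  def execute(description:)
    @created << description
    { status: "created", description: description }
  end
end

# Finds the tool the model asked for and calls it with the model's arguments.
# The {"name": ..., "arguments": {...}} shape is an assumption for this sketch.
def dispatch_tool_call(tools, tool_call_json)
  call = JSON.parse(tool_call_json)
  tool = tools.find { |candidate| candidate.name == call.fetch("name") }
  raise ArgumentError, "unknown tool: #{call['name']}" unless tool

  # JSON keys are strings; Ruby keyword arguments need symbols
  arguments = call.fetch("arguments", {}).transform_keys(&:to_sym)
  tool.execute(**arguments)
end

tool = ToyCreateProjectTool.new
model_output = '{"name":"create_project","arguments":{"description":"Internal wiki revamp"}}'
result = dispatch_tool_call([tool], model_output)
p result
```

Rejecting unknown tool names matters: the model only chooses among the tools you explicitly registered, such as the one passed to `with_tool` in the controller.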

Introducing Tools

app/controllers/projects_controller.rb

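    class ProjectsController < ApplicationController
      def index
        chat = RubyLLM.chat

        # ChatInstruction is an app-specific model holding the system instructions
        ChatInstruction.for_projects.each do |chat_instruction|
          chat.with_instructions(chat_instruction.content)
        end

        # Let the model create projects on behalf of the current user
        chat.with_tool(CreateProjectTool.new(current_user))
        chat.ask params[:query]
      end
    end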

Using RubyLLM everywhere

app/controllers/projects_controller.rb

    class ProjectsController < ApplicationController
      def update
        if @project.update(project_params)
          # Run the LLM work in a background job so the request stays fast
          EnhanceProjectJob.perform_later(@project)
          redirect_to @project
        else
          # ...
        end
      end
    end

Soon available on https://www.slog-app.com

Let's Wrap Up

- LLMs are probabilistic, which means we can guide their behavior

- It's easy to sprinkle some AI into your codebase with RubyLLM

- No extra external services or no-code tools to depend on

- They can understand your context and fully replace parts of your code when needed

Deploy it with Kamal

Dockerfile

    FROM ghcr.io/open-webui/open-webui

config/deploy.yml

    service: open_webui
    image: guillaumebriday/open_webui

    servers:
      web:
        - 192.168.1.1

    proxy:
      ssl: true
      app_port: 8080
      host: open-webui.guillaumebriday.fr

    # Open WebUI stores its database and uploads here, so the volume must be writable
    volumes:
      - /app/backend/data:/app/backend/data


Deploy it with Scaleway